Jan 30, 2026

Data Classification Policy: Definition, Controls, and Audit Expectations

By Fraxtional LLC

When teams first search for a data classification policy, it is rarely academic curiosity. It usually follows a near miss, an audit question that stalled an answer, or a growing realization that sensitive data exists across systems with uneven controls and unclear ownership. Leaders want clarity on what data exists, who is accountable for it, and how it should be handled under regulatory scrutiny.

A well-defined data classification policy addresses that uncertainty by setting enforceable rules for how information is categorized, accessed, and protected across the organization. The stakes are tangible. According to IBM's 2025 Cost of a Data Breach Report, the average cost of a data breach was $4.44 million globally and $10.22 million for U.S. incidents, reflecting regulatory penalties, legal exposure, and remediation costs tied to sensitive data handling. Those costs rise when organizations cannot quickly identify which data was exposed and which rules applied.

In this guide, we break down how data classification policies work in practice, what regulators and auditors expect to see, and how to structure a policy that stands up during audits, incidents, and growth.

Key Takeaways

- Data classification policies convert legal exposure into enforceable handling rules that regulators, auditors, and sponsor banks actively test during audits, incidents, and supervisory reviews.

- Classification levels must drive materially different controls for access, encryption, logging, retention, and sharing, or policies fail when auditors test systems and workflows.

- Regulators expect named data owners to approve classifications, document exceptions, accept residual risk, and produce evidence showing decisions were reviewed and enforced.

- During incidents, data classification determines notification obligations, reporting timelines, and regulatory response based on exposed data types rather than affected systems.

- Policies adapted from templates are frequently flagged when classification decisions, reclassification triggers, and controls do not align with actual data flows, vendors, and operational practices.

What Is a Data Classification Policy?

A data classification policy is a formal control document that defines how an organization categorizes information based on risk, legal exposure, and business impact, then binds each category to mandatory handling, access, and protection requirements that auditors and regulators can test.

What a Data Classification Policy Should Include

- Policy Purpose and Risk Objective: States why classification exists, such as reducing regulatory exposure, protecting regulated data sets, and creating enforceable handling standards tied to enterprise risk appetite.

- Defined Classification Levels: Establishes fixed categories such as Public, Internal, Confidential, and Restricted, each mapped to measurable impact from unauthorized disclosure, alteration, or loss.

- Classification Criteria: Specifies how data is classified using factors like regulatory coverage, sensitivity of data elements, aggregation risk, financial impact, and reputational exposure.

- Data Ownership Accountability: Assigns executive ownership for classification decisions, approvals, and acceptance of residual risk when exceptions occur.

- Handling and Access Requirements: Links each classification level to required controls such as access restrictions, authentication standards, encryption expectations, and approved sharing methods.

- Lifecycle and Reclassification Rules: Defines when data must be reviewed and reclassified, upward or downward, due to aggregation, system migration, retention expiry, or changes in use.

- Exception and Escalation Process: Documents how deviations from classification rules are approved, recorded, time-bound, and reviewed during audits.

- Review and Governance Cadence: Sets mandatory review intervals and identifies who validates ongoing alignment with regulatory, audit, and operational expectations.
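The classification criteria above can be made repeatable with a "highest applicable level" rule: each criterion can only raise a dataset's level, and every trigger is recorded as rationale for the audit file. The following is a minimal Python sketch under stated assumptions. The `DatasetProfile` fields, the `ELEMENT_FLOOR` mapping, and the `classify` function are hypothetical names, and the element-to-level assignments are illustrative examples drawn from this article's level descriptions, not a regulatory standard.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """The four levels described in this article, ordered by risk."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical minimum level for each data element, based on this article's examples.
ELEMENT_FLOOR = {
    "ssn": Level.RESTRICTED,
    "full_pan": Level.RESTRICTED,
    "auth_secret": Level.RESTRICTED,
    "account_number": Level.CONFIDENTIAL,
    "transaction_history": Level.CONFIDENTIAL,
}


@dataclass
class DatasetProfile:
    """Hypothetical answers to the policy's classification criteria for one dataset."""
    name: str
    approved_for_release: bool = False
    regulated_elements: frozenset[str] = frozenset()
    high_impact_if_disclosed: bool = False  # material financial or reputational harm


def classify(profile: DatasetProfile) -> tuple[Level, list[str]]:
    """Return the highest level any criterion triggers, plus the reasons for the record."""
    if profile.approved_for_release:
        level, reasons = Level.PUBLIC, ["owner approved public release"]
    else:
        level, reasons = Level.INTERNAL, ["default for non-public data"]

    # Regulatory coverage and element sensitivity: each element sets a minimum level.
    for element in sorted(profile.regulated_elements):
        # Unmapped regulated elements default to Confidential until an owner decides.
        floor = ELEMENT_FLOOR.get(element, Level.CONFIDENTIAL)
        level = max(level, floor)
        reasons.append(f"{element} requires at least {floor.name}")

    # Impact criteria can raise the level but never lower it.
    if profile.high_impact_if_disclosed and level < Level.CONFIDENTIAL:
        level = Level.CONFIDENTIAL
        reasons.append("high financial or reputational impact if disclosed")

    return level, reasons


level, why = classify(
    DatasetProfile("crm_export", regulated_elements=frozenset({"account_number", "ssn"}))
)
print(level.name, why)
```

Keeping the reasons alongside the level gives the data owner something concrete to approve and gives auditors the documented rationale they test for.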

A data classification policy converts abstract data risk into enforceable controls that regulators, auditors, and investors can validate. Without this structure, data handling remains inconsistent and difficult to defend under examination.

Why Data Classification Policies Matter for Regulated Businesses

In regulated environments, a data classification policy functions as a control boundary that determines how information is governed, audited, and defended under regulatory examination, enforcement actions, and investor due diligence.

- Regulatory Attribution Clarity: Classification links specific data sets to governing laws and industry standards such as GLBA, HIPAA, state privacy statutes, or PCI DSS, allowing regulators and assessors to trace obligations to defined controls rather than inferred practices.

- Audit Evidence Readiness: Examiners expect documented proof showing how data was classified, when it was reviewed, and which controls applied at that time. Classification provides the reference layer that auditors validate first.

- Risk Containment During Incidents: When an incident occurs, classification determines reportability thresholds, notification scope, and regulator response timelines based on the data involved rather than system location.

- Sponsor Bank and Partner Confidence: Banks and payment partners assess classification rigor to determine whether downstream data handling meets their supervisory obligations and risk tolerance.

- Capital and Transaction Scrutiny: Investors and acquirers review classification programs to identify latent compliance exposure tied to customer data, financial records, or operational intelligence.

For regulated businesses, data classification policies define regulatory defensibility. Without them, audits, incidents, and transactions expose unbounded data risk that cannot be credibly explained or contained.

Data classification policies fail when ownership, approvals, and audit evidence are unclear. Fraxtional provides senior compliance leadership that takes ownership of these decisions without a full-time hire. Get in touch with us!

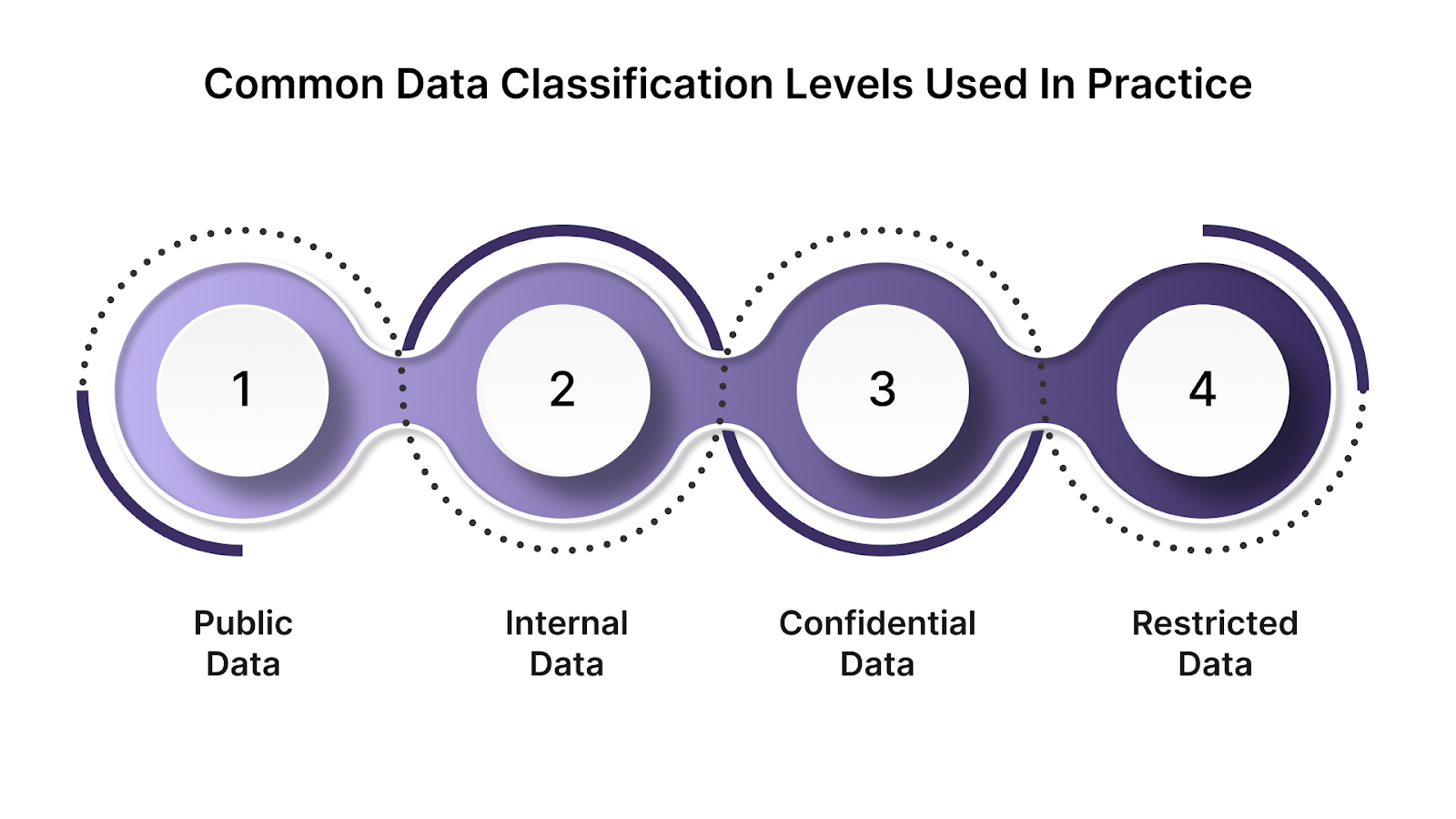

Common Data Classification Levels Used in Practice

Most regulated organizations rely on a small number of classification levels to create enforceable handling rules tied to legal exposure, audit scope, and incident response thresholds. Each level maps data risk to specific control requirements that regulators can verify.

1. Public Data

Public data is information approved for external release where unauthorized disclosure, alteration, or loss creates negligible regulatory or financial exposure.

Typical Data Included: Published policies, marketing content, press releases, job postings, and approved public financial summaries.

- Release Approval Criteria: Document who authorizes public designation and require confirmation that no regulated data elements are present.

- Integrity Controls: Apply version control and change tracking to prevent unauthorized modification of externally published content.

- Reclassification Triggers: Require review when public data is combined with non-public datasets or reused in internal analysis.
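A common way to enforce this trigger is a "high-water mark" rule: a combined dataset starts at the highest classification among its sources and is routed to the data owner for review, because aggregation can create sensitivity that no single source carries. This is a minimal Python sketch; `combined_level` and the source names are hypothetical, and the rule sets only a floor, leaving the owner to decide whether the combination warrants a higher level.

```python
from enum import IntEnum


class Level(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


def combined_level(sources: dict[str, Level]) -> tuple[Level, bool]:
    """Return (minimum level, owner review required) for a dataset built from `sources`."""
    if not sources:
        raise ValueError("a combined dataset needs at least one source")
    # High-water mark: never lower than the most sensitive input.
    floor = max(sources.values())
    # Any combination of two or more sources is an aggregation event, which the
    # policy treats as a reclassification trigger for the data owner to review.
    review_required = len(sources) > 1
    return floor, review_required


level, review = combined_level(
    {"press_releases": Level.PUBLIC, "crm_accounts": Level.CONFIDENTIAL}
)
print(level.name, review)  # CONFIDENTIAL True
```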

2. Internal Data

Internal data supports business operations and is restricted to employees and approved contractors due to operational, contractual, or reputational sensitivity.

Typical Data Included: Internal SOPs, process documentation, operational dashboards, non-public vendor contracts, and internal communications.

- Access Boundaries: Limit access through role-based permissions aligned to job function and system ownership.

- Sharing Constraints: Prohibit external distribution without documented approval from the data owner or compliance lead.

- Periodic Review: Reassess classification during system migrations, vendor onboarding, or changes in data use.

3. Confidential Data

Confidential data includes regulated or business-critical information where unauthorized disclosure creates reportable risk or contractual breach.

Typical Data Included: Customer account records, regulated PII, transaction histories, audit workpapers, and non-public financial reports.

- Control Requirements: Mandate encryption in storage and transmission, access logging, and documented access reviews.

- Regulatory Mapping: Explicitly tie datasets to governing rules such as GLBA, HIPAA, or state privacy laws.

- Incident Escalation: Define mandatory notification paths and timelines when confidentiality is compromised.

4. Restricted Data

Restricted data represents the highest risk category and includes information where exposure results in significant regulatory penalties, enforcement actions, or material harm.

Typical Data Included: Social Security numbers, full payment card data, cryptographic keys, authentication secrets, law enforcement data, and unreleased financial results.

- Strict Access Authorization: Require named approval, multi-factor authentication, and formal justification for access.

- Use Limitations: Constrain processing, export, and replication to approved systems and documented purposes.

- Lifecycle Controls: Enforce defined retention limits, secure destruction methods, and evidence retention for audits.
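Because each level must drive materially different controls, the handling rules in this section can be expressed as a cumulative baseline and compared against what a system actually has in place. The Python sketch below assumes hypothetical control labels taken from the handling rules above (such as `access_logging` and `mfa`); they are not settings from any particular product, and a real baseline would be defined by the policy owner.

```python
from enum import IntEnum


class Level(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Controls introduced at each level (hypothetical labels based on the handling rules above).
CONTROLS_ADDED_AT: dict[Level, set[str]] = {
    Level.PUBLIC: {"change_tracking"},
    Level.INTERNAL: {"role_based_access"},
    Level.CONFIDENTIAL: {
        "encryption_at_rest",
        "encryption_in_transit",
        "access_logging",
        "access_reviews",
    },
    Level.RESTRICTED: {
        "named_access_approval",
        "mfa",
        "retention_limit",
        "secure_destruction",
    },
}


def required_controls(level: Level) -> set[str]:
    """Controls are cumulative: each level also requires everything below it."""
    required: set[str] = set()
    for lower in Level:  # iterates in definition order, lowest risk first
        if lower <= level:
            required |= CONTROLS_ADDED_AT[lower]
    return required


def control_gaps(level: Level, implemented: set[str]) -> set[str]:
    """Return required controls that a system classified at `level` is missing."""
    return required_controls(level) - implemented


gaps = control_gaps(
    Level.CONFIDENTIAL,
    {"change_tracking", "role_based_access", "encryption_at_rest", "access_logging"},
)
print(sorted(gaps))  # ['access_reviews', 'encryption_in_transit']
```

Running the same check across every system in scope mirrors the control-to-classification sampling auditors perform; a non-empty result is either remediated or routed through the exception process.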

If your data governance or classification policies need senior ownership, audit-ready structure, and regulator credibility without adding full-time headcount, Fraxtional provides fractional compliance leadership built for regulated growth.

Roles and Accountability in an Information Classification Policy

An information classification policy only holds up under audit when accountability is explicitly assigned, decision authority is documented, and each role is tied to specific, testable actions across the data lifecycle.

Clear role separation converts classification from intent into evidence. When accountability is explicit, classification decisions remain defensible under audit, incident review, and regulatory scrutiny.

Aligning data handling and access controls with payment security expectations is critical for reducing audit risk. Our guide, How to Prepare for a PCI Audit: Expert Tips for 2026, offers expert-led recommendations.

Data Classification Policy Examples for Key Industries

Data classification policies differ by industry because regulatory scope, supervisory expectations, and data risk profiles dictate how classification thresholds are defined and enforced in practice.

- Financial Services and Fintech: Customer identifiers, transaction records, and credit data are classified as Confidential or Restricted due to GLBA, state privacy laws, and sponsor bank oversight, with strict access logging and breach notification requirements.

- Crypto and Digital Asset Firms: Wallet identifiers, private keys, transaction metadata, and customer verification records fall under Restricted or an equivalent highest-risk classification, driven by AML obligations, custody risk, and heightened supervisory scrutiny.

- Healthcare and Health Technology: Patient records, diagnostic data, and insurance identifiers are classified as Confidential or Restricted under HIPAA, with mandatory encryption, access audits, and minimum necessary use controls.

- Payments and Card Processing: Within the cardholder data environment, PANs and sensitive authentication data such as CVVs are classified as Restricted, and PCI DSS prohibits retaining sensitive authentication data after authorization. Controls center on segmentation, tokenization, and limited personnel access.

- Public Sector and Regulated Programs: Criminal justice data, tax records, and student information are classified at the highest risk tiers based on statutory protection, requiring controlled environments and documented authorization.

Industry-specific classification reflects legal exposure, not preference. Policies that align classification levels to sector regulations withstand audits, supervisory reviews, and enforcement actions.

Strengthen governance decisions by pairing policy design with structured risk evaluation. Learn How to Conduct a Qualitative Risk Assessment for Fintech & Crypto Firms.

Sample Structure for a Data Classification Policy

A defensible data classification policy follows a fixed structure that regulators, auditors, and counterparties can trace from risk definition through control enforcement and ongoing governance.

- Purpose and Risk Scope: States why classification exists, identifies regulated data exposure, and defines the risk outcomes the policy is designed to control.

- Applicability and Coverage: Specifies systems, data types, business units, third parties, and jurisdictions governed by the policy, including exclusions and boundary conditions.

- Classification Framework: Defines classification levels, decision criteria, and required handling standards that convert risk ratings into enforceable controls.

- Governance and Accountability: Documents ownership, approval authority, escalation paths, and exception management tied to classification decisions.

- Maintenance and Evidence: Establishes review cadence, reclassification triggers, documentation requirements, and artifacts retained for audit and regulatory review.

A clear policy structure converts data risk into auditable controls. Without defined sections and evidence expectations, classification programs fail under scrutiny.

How Regulators and Auditors Evaluate Data Classification Policies

Regulators and auditors evaluate data classification policies by testing whether classification decisions are documented, consistently applied, and directly linked to enforceable controls rather than stated intent.

- Classification Evidence Traceability: Review whether datasets are mapped to documented classification decisions with timestamps, owners, and rationale that can be traced during examination.

- Control-to-Classification Alignment: Validate that access controls, encryption, logging, and retention settings differ materially by classification level and are technically enforced.

- Reclassification Governance: Examine how aggregation, system migrations, new data sources, or changes in use trigger mandatory reclassification and control updates.

- Exception Management Discipline: Assess whether deviations from classification rules are formally approved, time-bound, risk-accepted by accountable executives, and revisited.

- Operational Consistency Testing: Sample systems and workflows to confirm classification is applied uniformly across business units, vendors, and data environments.
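Several of these tests can be run internally before an examiner does. The sketch below assumes a hypothetical register in which each classification decision records an owner, date, and rationale, and each exception records an approver and an expiry date. The record types and `audit_findings` are illustrative names, and the 365-day review interval is an assumption, since review cadence is set by the policy itself.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ClassificationRecord:
    """One documented classification decision (hypothetical register format)."""
    dataset: str
    level: str
    owner: str        # named, accountable data owner
    decided_on: date  # date of the last approved decision or review
    rationale: str


@dataclass
class ExceptionRecord:
    """One approved deviation from the classification rules."""
    dataset: str
    approved_by: str  # executive who accepted the residual risk
    expires_on: date  # exceptions must be time-bound


def audit_findings(
    records: list[ClassificationRecord],
    exceptions: list[ExceptionRecord],
    today: date,
    max_review_age_days: int = 365,  # assumed cadence; use the policy's own interval
) -> list[str]:
    """Flag evidence gaps an examiner would likely raise."""
    findings: list[str] = []
    for r in records:
        if not r.owner.strip():
            findings.append(f"{r.dataset}: no named owner")
        if not r.rationale.strip():
            findings.append(f"{r.dataset}: no documented rationale")
        age = (today - r.decided_on).days
        if age > max_review_age_days:
            findings.append(f"{r.dataset}: last reviewed {age} days ago")

    classified = {r.dataset for r in records}
    for e in exceptions:
        if e.dataset not in classified:
            findings.append(f"{e.dataset}: exception has no classification record")
        if not e.approved_by.strip():
            findings.append(f"{e.dataset}: exception has no accountable approver")
        if e.expires_on < today:
            findings.append(f"{e.dataset}: exception expired on {e.expires_on.isoformat()}")
    return findings


records = [
    ClassificationRecord("crm_accounts", "Confidential", "Head of Customer Ops",
                         date(2025, 6, 1), "Contains customer account numbers (GLBA)"),
    ClassificationRecord("kyc_images", "Restricted", "", date(2024, 11, 15),
                         "Government ID images"),
]
exceptions = [ExceptionRecord("kyc_images", "CCO", date(2025, 12, 31))]
for finding in audit_findings(records, exceptions, today=date(2026, 1, 30)):
    print(finding)
```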

Evaluation focuses on execution, not language. Classification policies pass scrutiny only when decisions, controls, and evidence remain aligned under real operating conditions.

Building defensible governance requires addressing regulatory risk across operations, data, and partners. This article outlines essential fintech compliance practices and the challenges organizations face.

Where Fractional Compliance Leadership Fits

Fractional compliance leadership becomes necessary when data classification decisions require senior regulatory judgment, cross-functional authority, and audit defensibility that operational teams or tooling alone cannot provide.

- Executive Risk Ownership Gaps: Provides accountable leadership when no full-time executive is designated to approve classification decisions, accept residual risk, or sign off on exceptions.

- Regulatory Design and Validation: Establishes classification frameworks that align with examiner expectations and validates them against current regulatory guidance before audits occur.

- Cross-Functional Enforcement Authority: Resolves conflicts between legal, IT, security, and business teams by setting binding classification standards tied to enterprise risk tolerance.

- Audit and Examination Readiness: Prepares classification evidence, decision records, and exception logs so organizations can withstand regulator and auditor scrutiny without reactive remediation.

- Scalable Governance During Growth: Maintains classification discipline during rapid expansion, new product launches, acquisitions, or jurisdictional changes without delaying operations.

Fractional compliance leadership fills the gap between policy intent and regulatory defensibility. It helps ensure data classification decisions remain authoritative, consistent, and audit-ready as complexity increases.

How Fraxtional Supports Data Classification Policy Execution

Data classification policies often fail at execution due to unclear ownership, inconsistent controls, or weak audit evidence. Fraxtional provides fractional compliance leadership to close these gaps and make classification programs defensible under scrutiny.

- Senior-Level Classification Oversight: Fraxtional’s fractional leaders act as accountable executives for classification decisions, exception approvals, and residual risk acceptance when internal ownership is limited.

- Regulatory-Aligned Policy Design: Classification frameworks are built and reviewed against examiner expectations across financial services, fintech, crypto, and payments environments.

- Control and Evidence Validation: Policies are mapped to actual access controls, encryption settings, logging, and retention mechanisms to ensure classification decisions are enforceable and testable.

- Audit and Sponsor Bank Readiness: Fraxtional prepares classification evidence, decision records, and exception logs so teams can respond confidently during audits and bank reviews.

- Scalable Governance Support: Ongoing fractional leadership supports reclassification, system changes, vendor onboarding, and growth without delaying operations or hiring full-time executives.

Speak with Fraxtional to review your data classification policy and strengthen its regulatory posture before your next audit or bank review.

Conclusion

Data classification policies fail or succeed at the moment they are tested, not when they are written. Audits, incidents, sponsor bank reviews, and transactions expose whether classification decisions were applied consistently, revisited as data changed, and supported by defensible evidence. Organizations that treat classification as a living control, rather than static documentation, retain clarity when scrutiny increases.

As regulatory expectations tighten and data environments grow more complex, many teams reach a point where internal ownership is stretched and senior judgment becomes the limiting factor. That is where experienced, independent compliance leadership adds measurable value by validating policy design, closing execution gaps, and preparing classification programs to withstand examination.

If your organization needs senior-level guidance to review, strengthen, or operationalize its data classification policy, Fraxtional provides fractional compliance leadership built for regulated growth.

Speak with an expert at Fraxtional to assess your data classification readiness and regulatory posture.

FAQs

A data classification policy focuses on data elements and handling controls, while an information classification policy may also cover documents, reports, and derived outputs reviewed during audits.

A sample data classification policy can guide structure, but each entity must adjust classifications, controls, and ownership to reflect its specific regulatory exposure and data flows.

Failures often stem from outdated classifications, missing reclassification records, or controls that do not match documented classification levels during examiner testing.

A data classification policy template should be revisited after system migrations, vendor changes, data aggregation, or new regulatory guidance that alters risk thresholds.

Regulators use data classification policy examples to assess consistency of application, not creativity, comparing documented examples against actual system and access controls.

